How Does a Software Company Help Improve Your Project?

What does a software company actually do?

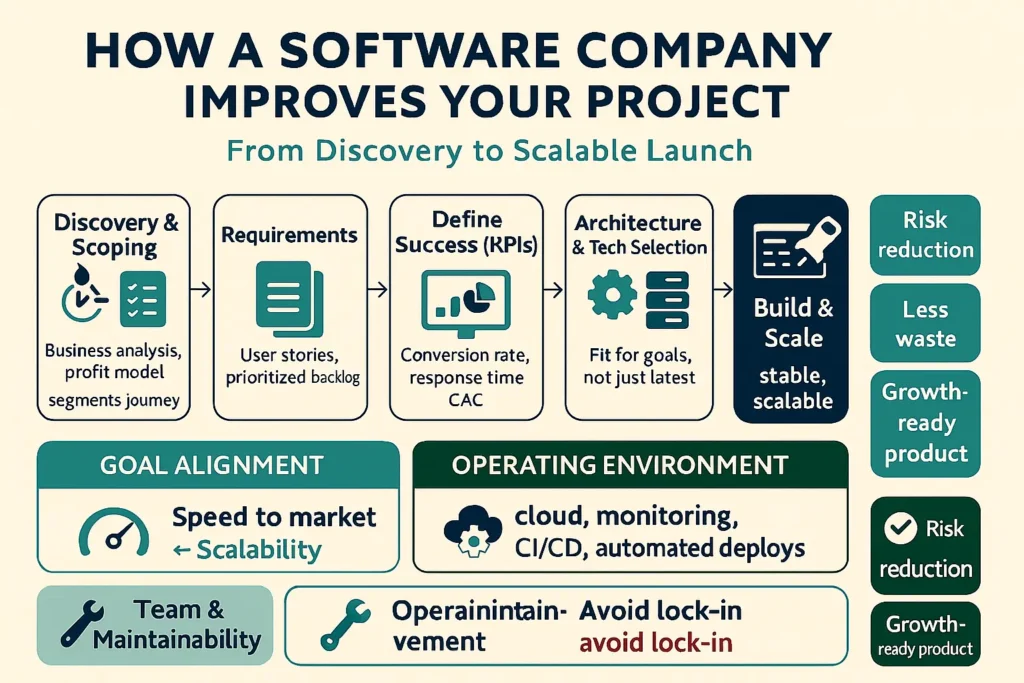

A professional software company doesn’t just write code; it builds an integrated system for your product’s success. It starts by analyzing the problem and modeling the solution, then selects the appropriate technologies, and ends with a stable, scalable launch. The result: fewer technical risks, less wasted time and money, and a vision turned into a usable, growth-ready product.

Turning goals into clear requirements (Discovery & Scoping)

- Business analysis: understanding the profit model, customer segments, and digital journey.

- Requirements gathering: turning ideas into User Stories and prioritized feature lists.

- Defining success: KPIs such as conversion rate, response time, and acquisition cost.

Choosing the right technology — not just “the latest”

Selecting a framework or database isn’t random. A software company evaluates:

- Alignment with goals: Do you need fast time to market or maximum scalability?

- Operating environment: compatibility with your cloud infrastructure, monitoring tools, and deployment automation.

- Your internal team: what can they maintain later? Avoid being “locked in” to a technology no one masters.

The practical value a software company adds

- Shortening time-to-market through rapid prototyping and iterative releases.

- Reducing total cost of ownership (TCO) with clean architecture, flexible tooling, and test automation.

- Improving quality via coding standards, code reviews, and strict acceptance testing.

- Managing risk with technical risk maps, fallback plans, and post-launch performance monitoring.

When is hiring a software company a decisive move?

- A market-ready idea that needs an MVP within 8–12 weeks.

- A technically troubled project: poor performance, messy codebase, team conflicts.

- Rapid growth requiring scalability: rising user counts, external integrations, security requirements.

Ideal working phases with a software company

- Discovery & planning: workshops, roadmap, prioritized features.

- Design & prototyping: interactive mockups, user flows, and visual identity.

- Agile development: weekly sprints, Kanban boards, full transparency.

- Testing & quality assurance: unit/integration/performance/security.

- Launch & monitoring: real-time monitoring, analytics, alerts.

- Continuous improvement: feedback loops, A/B testing, quarterly development roadmap.

An agile methodology that multiplies results

Working with Scrum/Kanban enables high flexibility and incremental value delivery, with periodic reviews to adjust priorities based on real data—not assumptions.

Building a scalable product — from architecture to security and compliance

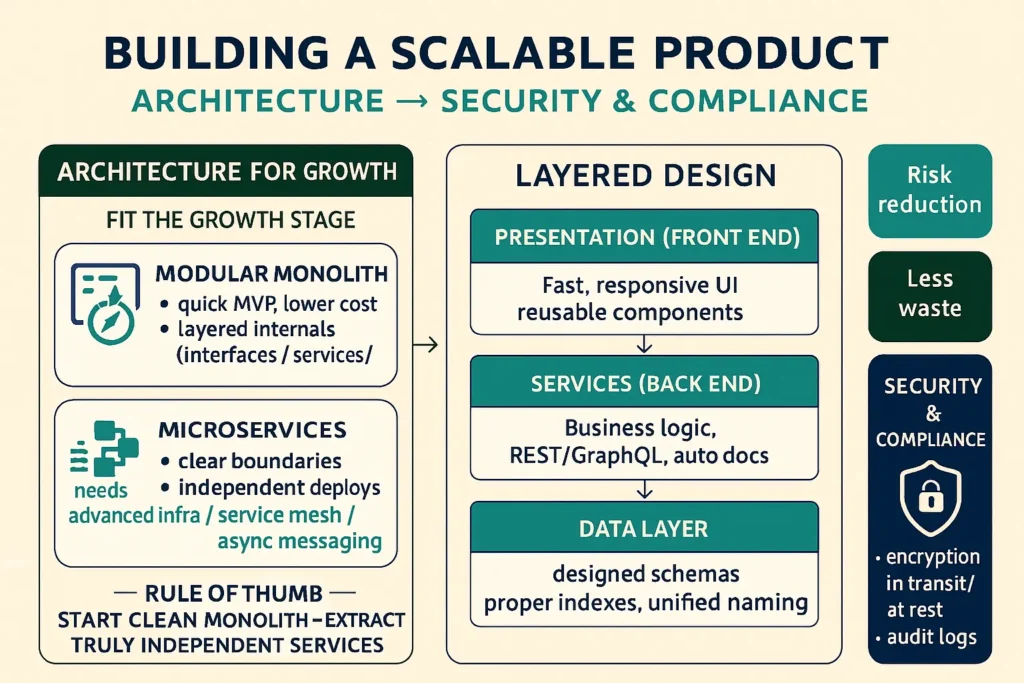

An engineering architecture that fits the growth stage

An experienced software company doesn’t adopt a tech “trend” just because it’s popular; it chooses the architecture that suits your current stage and upcoming plans.

Smart monolith or microservices?

- Modular Monolith: suitable for launching an MVP quickly and at a lower cost, with internal separation between layers (interfaces, services, data repositories). It reduces operational complexity and lets you focus on product–market fit.

- Microservices: suitable when service boundaries and traffic patterns are clear and you need independent per-service deployments and distributed teams. They require advanced infrastructure, precise observability, and careful management of inter-service communication (service mesh/async messaging).

Rule of thumb: start with a clean monolith that can be split later, then extract the services that truly need independence.

Clear layers with separation of concerns

- Presentation layer (Front End): fast, responsive, interactive interfaces; reusable component architecture.

- Services layer (Back End): clear business logic, organized REST/GraphQL APIs, automatic documentation.

- Data layer: well-designed schemas, appropriate indexes, and unified naming conventions.
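A minimal Python sketch of how the three layers stay separated inside a modular monolith. Every name here (`Order`, `OrderRepository`, `InMemoryOrderRepository`, `OrderService`, `handle_create_order`) is hypothetical, and the in-memory repository stands in for a real database; the point is only that each layer talks to the one below it through a narrow interface.

```python
from dataclasses import dataclass
from typing import Dict, Protocol


@dataclass
class Order:
    order_id: int
    customer: str
    total: float


# Data layer contract: the service depends on this interface, not on a concrete database.
class OrderRepository(Protocol):
    def save(self, order: Order) -> None: ...
    def get(self, order_id: int) -> Order: ...


class InMemoryOrderRepository:
    """Stand-in for a real database-backed repository (hypothetical)."""

    def __init__(self) -> None:
        self._rows: Dict[int, Order] = {}

    def save(self, order: Order) -> None:
        self._rows[order.order_id] = order

    def get(self, order_id: int) -> Order:
        return self._rows[order_id]


# Services layer: business rules live here and nowhere else.
class OrderService:
    def __init__(self, repo: OrderRepository) -> None:
        self._repo = repo
        self._next_id = 1

    def create_order(self, customer: str, total: float) -> Order:
        if total <= 0:
            raise ValueError("order total must be positive")
        order = Order(self._next_id, customer, total)
        self._next_id += 1
        self._repo.save(order)
        return order


# Presentation layer: translates requests and responses, holds no business logic.
def handle_create_order(service: OrderService, payload: dict) -> dict:
    try:
        order = service.create_order(payload["customer"], float(payload["total"]))
    except ValueError as exc:
        return {"status": 400, "error": str(exc)}
    return {"status": 201, "order_id": order.order_id}


if __name__ == "__main__":
    service = OrderService(InMemoryOrderRepository())
    print(handle_create_order(service, {"customer": "acme", "total": "49.90"}))
    # {'status': 201, 'order_id': 1}
```

Because `OrderService` depends on the repository interface rather than a concrete database, the storage can be swapped, or the service later extracted into its own deployable, without touching the presentation code.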

Data architecture — because performance starts at the table

Choosing the right database

- Relational (PostgreSQL/MySQL): precise transactions, strong consistency, complex reporting.

- NoSQL (Document/Key-Value): flexible structures, high read/write speed for certain use cases.

- Hybrid (Polyglot Persistence): using more than one engine depending on data/workload type.

Scaling before you need it

- Read/write separation: read replicas, queues for heavy operations.

- Caching: in-application caches and Redis for hot data, plus a CDN for front-end assets.

- Smart indexing: composite indexes, slow-query monitoring, execution plans (EXPLAIN).

- Data archiving and pruning: retention policies, archive tables, and periodic reporting.
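Caching is usually the cheapest of these levers. The sketch below shows the cache-aside pattern (check the cache, load on a miss, store with an expiry) in plain Python, assuming a single process; in a multi-instance deployment the same logic would sit in front of a shared store such as Redis. `TTLCache`, `get_or_load`, and the report key are illustrative names, and the injectable clock exists only to make expiry testable.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Cache-aside helper kept in process memory (a stand-in for a shared Redis cache)."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, Any]] = {}  # key -> (expires_at, value)
        self.hits = 0
        self.misses = 0

    def get_or_load(self, key: str, loader: Callable[[], Any]) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            self.hits += 1
            return entry[1]
        # Miss or expired entry: read from the source of truth, then cache with a fresh expiry.
        self.misses += 1
        value = loader()
        self._entries[key] = (now + self._ttl, value)
        return value

    def invalidate(self, key: str) -> None:
        # Call after writing the underlying data so readers don't see stale values.
        self._entries.pop(key, None)


if __name__ == "__main__":
    fake_now = [0.0]
    cache = TTLCache(ttl_seconds=60, clock=lambda: fake_now[0])

    def load_daily_report() -> dict:
        return {"rows": 42}  # imagine an expensive database query here

    cache.get_or_load("report:daily", load_daily_report)  # miss: loads
    cache.get_or_load("report:daily", load_daily_report)  # hit: served from cache
    fake_now[0] = 61.0
    cache.get_or_load("report:daily", load_daily_report)  # expired: loads again
    print(cache.hits, cache.misses)  # 1 2
```

The hard part in practice is invalidation: calling `invalidate` after every write to the underlying data keeps readers from seeing stale results for up to a full TTL.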

APIs and integrations — your gateway to growth

REST or GraphQL?

- REST: simple, easily cacheable, suitable for most scenarios.

- GraphQL: flexible, customized data fetching, ideal for multi-interface applications, but requires strong governance and prevention of expensive queries.

Developer-friendly API design

- Live documentation (OpenAPI/Swagger), usage examples, and rate-limiting policies.

- Organized versioning and a transparent deprecation plan.

- Layered security: OAuth2/JWT, webhook signatures, and IP allowlists where necessary.
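Webhook signatures let a receiver confirm that a request really came from the expected sender and was not altered. A common scheme, assumed here rather than taken from any specific provider, signs `timestamp.body` with HMAC-SHA256 and rejects anything outside a time window to block replays. The function names, the 300-second tolerance, and the message layout are hypothetical; check your provider's documentation for its exact header and format.

```python
import hashlib
import hmac
import time
from typing import Optional

TOLERANCE_SECONDS = 300  # assumed replay window; tune per provider


def sign(secret: bytes, timestamp: int, body: bytes) -> str:
    # Sign "timestamp.body" so an old payload cannot be replayed under a new timestamp.
    message = str(timestamp).encode() + b"." + body
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify(secret: bytes, timestamp: int, body: bytes, signature: str,
           now: Optional[float] = None) -> bool:
    now = time.time() if now is None else now
    if abs(now - timestamp) > TOLERANCE_SECONDS:
        return False  # too old, or clocks too far apart: reject
    expected = sign(secret, timestamp, body)
    # compare_digest avoids leaking, via timing, how many characters matched.
    return hmac.compare_digest(expected, signature)


if __name__ == "__main__":
    secret = b"shared-webhook-secret"  # hypothetical; load from a secrets manager in practice
    body = b'{"event": "invoice.paid"}'
    ts = int(time.time())
    sig = sign(secret, ts, body)
    print(verify(secret, ts, body, sig))                      # True
    print(verify(secret, ts, b'{"event": "tampered"}', sig))  # False
```

The receiver must verify against the raw request bytes, before any JSON parsing or re-serialization, or valid signatures will fail to match.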

User Experience (UX) as a profit lever

Clear journeys that reduce friction

- Journey maps from first visit to conversion, with quick usability tests every week or two.

- Discoverability: simple navigation, effective internal search, familiar UI elements.

- Speed of comprehension: clear error messages, precise instructions, and the minimum required fields.

Mobile-first performance

- WebP/AVIF images, lazy loading, and minimal render-blocking JavaScript.

- Tracking Core Web Vitals (LCP/CLS/INP) with continuous improvements.

Software quality — from testing to code reviews

The right testing pyramid

- Unit: business logic.

- Integration: component/data interactions.

- Acceptance (E2E): real user scenarios.

- Performance/Stress: load tests for traffic peaks.
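The base of the pyramid is cheap, fast unit tests on business rules. Here is a minimal sketch using Python's built-in `unittest`; `apply_discount` and its 50% cap are an invented rule chosen only to show the shape of such tests (a normal case, an edge case, and an invalid input).

```python
import unittest


def apply_discount(subtotal: float, coupon_percent: int) -> float:
    """Hypothetical business rule: percentage coupon, capped at 50%, rounded to cents."""
    if subtotal < 0:
        raise ValueError("subtotal cannot be negative")
    percent = max(0, min(coupon_percent, 50))
    return round(subtotal * (100 - percent) / 100, 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_regular_coupon(self):
        self.assertEqual(apply_discount(200.0, 10), 180.0)

    def test_coupon_is_capped(self):
        # An 80% coupon must not exceed the 50% cap.
        self.assertEqual(apply_discount(100.0, 80), 50.0)

    def test_negative_subtotal_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(-1.0, 10)


if __name__ == "__main__":
    unittest.main()
```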

Code reviews and coding standards

- Clear pull-request templates, checklists, and automated linters/static analysis.

- Definition of Done (DoD): no feature is merged before tests and documentation are complete.

DevOps and CI/CD — small, frequent releases

A reliable delivery pipeline

- CI: automatic builds, tests, and coverage reports.

- CD: blue/green or canary deployments with instant rollback capability.

- IaC: infrastructure defined as reviewable, auditable files.
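Canary deployments only pay off if the promote/rollback decision is explicit. The sketch below compares the canary's error rate against the stable version for one observation window; the minimum-traffic threshold, the 1.5x ratio, the noise margin, and the 2% ceiling are illustrative assumptions, not standard values, and a real pipeline would usually check latency as well.

```python
from dataclasses import dataclass


@dataclass
class WindowStats:
    requests: int
    errors: int

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def canary_decision(baseline: WindowStats, canary: WindowStats,
                    min_requests: int = 500, max_ratio: float = 1.5,
                    noise_margin: float = 0.001, abs_ceiling: float = 0.02) -> str:
    """Return "wait", "promote", or "rollback" for one observation window.

    All thresholds are illustrative assumptions, not industry standards.
    """
    if canary.requests < min_requests:
        return "wait"  # too little canary traffic to judge yet
    if canary.error_rate > abs_ceiling:
        return "rollback"  # hard ceiling, regardless of how the baseline behaves
    if canary.error_rate > baseline.error_rate * max_ratio + noise_margin:
        return "rollback"  # clearly worse than the stable version
    return "promote"  # shift more traffic to the canary, then re-evaluate


if __name__ == "__main__":
    stable = WindowStats(requests=10_000, errors=50)  # 0.5% errors
    print(canary_decision(stable, WindowStats(1_000, 5)))   # promote
    print(canary_decision(stable, WindowStats(1_000, 12)))  # rollback
```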

Monitoring and alerts before complaints

- Metrics (app/infrastructure): response time, error rate, resource usage.

- Structured logs: correlation IDs carried across services so each request can be traced.

- Distributed tracing: end-to-end visibility of request flow inside the system.
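Correlation IDs only help if every log line carries them automatically. This sketch uses Python's standard `logging` and `contextvars` modules to attach the current request's ID to JSON-formatted log records. The `X-Correlation-ID` header mentioned in the comment and the `handle_request` function are hypothetical; in a real service the ID would also be forwarded on outgoing calls so downstream services log the same value.

```python
import contextvars
import json
import logging
import uuid
from typing import Optional

# Holds the current request's correlation ID; each request/task sees its own value.
correlation_id = contextvars.ContextVar("correlation_id", default="-")


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON object that includes the correlation ID."""

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
            "correlation_id": correlation_id.get(),
        })


def handle_request(logger: logging.Logger, incoming_id: Optional[str] = None) -> str:
    # Reuse the caller's ID (e.g. from an assumed X-Correlation-ID header) or mint a new one.
    cid = incoming_id or uuid.uuid4().hex
    token = correlation_id.set(cid)
    try:
        logger.info("order received")
        logger.info("payment authorized")
        return cid
    finally:
        correlation_id.reset(token)  # don't leak the ID into unrelated work


if __name__ == "__main__":
    logger = logging.getLogger("shop")
    handler = logging.StreamHandler()
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    handle_request(logger, "req-123")
```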

Security and compliance — the foundation of trust and growth

Defense in depth

- Secrets management, key rotation, and the principle of least privilege.

- Interface protection: WAF, rate limiting, detection of common attacks (SQLi/XSS/CSRF).

- Encryption in transit and at rest: modern TLS and encrypted storage for sensitive data.
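Rate limiting is typically enforced at the gateway or WAF, but the underlying idea is simple. The sketch below implements a token bucket, one common algorithm (the article does not prescribe one): a client may burst up to `capacity` requests, and its budget refills at `rate` tokens per second. The class name and parameters are illustrative, and the injectable clock exists only for testing.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow bursts up to `capacity`, refilling at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self._clock = clock
        self._tokens = capacity  # start full so an idle client can burst
        self._last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self._clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self._tokens = min(self.capacity, self._tokens + (now - self._last) * self.rate)
        self._last = now
        if self._tokens >= cost:
            self._tokens -= cost
            return True
        return False


if __name__ == "__main__":
    bucket = TokenBucket(capacity=5, rate=1.0)
    print([bucket.allow() for _ in range(7)])  # burst of 5 allowed, then rejected until refill
```

In use, you would keep one `TokenBucket` per client key (API key or IP) and answer HTTP 429 when `allow()` returns False; an in-memory dictionary of buckets only works for a single instance, so multi-instance deployments usually keep the counters in a shared store.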

Vulnerability lifecycle

- Third-party component checks (SCA), regular updates, and scheduled penetration tests.

- Responsible disclosure policies and channels for security researchers’ reports.

Privacy and compliance

- Clear privacy policy, a user dashboard to manage their data, and tracking of deletion/export requests.

- Minimize data collection to what’s strictly necessary (data minimization).

Cloud and cost management — optimized performance without financial bleed

Choosing a hosting model

- PaaS to accelerate launch and reduce operational burden.

- Kubernetes when teams/services grow and you need high flexibility.

- Serverless for intermittent/event-driven tasks with precise cost control.

Cost governance

- Standardized resource tags by team/environment/feature.

- Spend limits and alerts, monthly usage reviews, and autoscaling with hard caps.

- Use a CDN to reduce origin egress and lower latency.
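Autoscaling with hard caps fits in a few lines. The sketch below follows the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current * currentMetric / targetMetric), skips changes inside a tolerance band, and then clamps the result. The min/max bounds and the 0.1 tolerance are assumed example values, and `desired_replicas` is a hypothetical helper, not a Kubernetes API.

```python
import math


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 2, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Proportional scaling in the style of the Kubernetes HPA, clamped to hard limits."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current  # close enough to target: avoid flapping
    else:
        desired = math.ceil(current * ratio)
    # The hard cap keeps a traffic spike (or a runaway bug) from becoming a runaway bill.
    return max(min_replicas, min(desired, max_replicas))


if __name__ == "__main__":
    print(desired_replicas(current=4, current_metric=90, target_metric=60))   # 6
    print(desired_replicas(current=4, current_metric=300, target_metric=60))  # 10 (capped)
```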

Technical roadmap — how do you turn vision into reality?

From MVP to a mature product

- Focused MVP: solve a specific problem with near-term success metrics.

- Data-driven improvement: log hypotheses, test, and refine.

- Measured scaling: split overloaded services, optimize the database, and increase automation.

- Operational maturity: proactive monitoring, quarterly failure drills, and emergency exercises.

How do you measure the results of collaborating with a software company?

Measurable indicators

- Speed: launch time, release cadence, and average lead time for features.

- Quality: fewer incidents, test coverage, error rates.

- Value: growth in conversions/subscriptions, lower acquisition cost, and higher retention.

- Sustainability: maintainability, clear documentation, and reduced reliance on specific individuals.

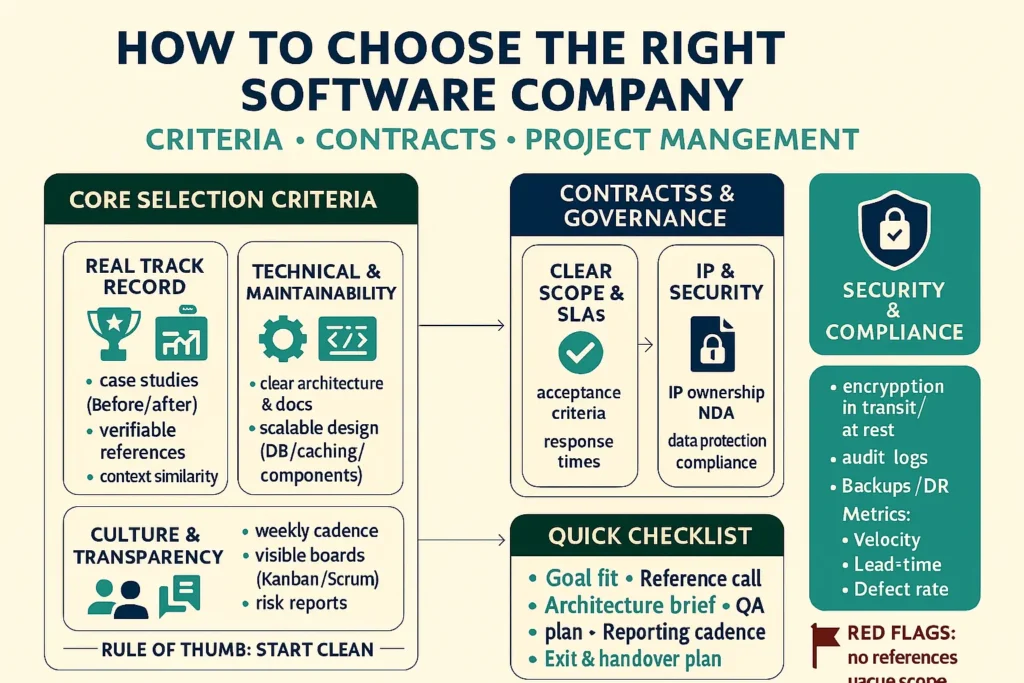

How to Choose the Right Software Company — Criteria, Contracts, and Effective Project Management

Core selection criteria — what exactly should you look for?

Choosing a software company isn’t based on price alone, but on expected value, execution quality, and sustainability of collaboration.

Real track record, not slide decks

- Documented case studies: before/after, clear performance figures (conversions, speed, incidents).

- Verifiable client references: brief contact with a former client in the same sector/size.

- Context similarity: experience in your environment (B2B/B2C, security requirements, multiple languages).

Technical depth and maintainability

- Clarity of architecture, coding standards, and documentation from the outset.

- Design that considers scalability (databases, caching, component separation).

- Realistic testing and QA plans (Unit/Integration/E2E/Performance).

Culture and transparency

- Steady communication cadence (weekly standups, sprint reviews).

- Visible work boards (Kanban/Scrum) and reports clarifying progress and risks.

- Receptiveness to feedback and quick adjustments to the roadmap.

How do you run the comparison? (A smart, concise RFP)

What should the Request for Proposal (RFP) include?

- Business goals and measurable KPIs.

- Initial scope: high-level user stories and a prioritized list of no more than 8–12 core features.

- Technical constraints (required integrations, preferred cloud, security/compliance constraints).

- Timeframe and budget range (a range, not a fixed early figure).

- Collaboration expectations (meeting cadence, tools, responsibilities for both parties).

Interview questions that reveal depth

- How do you choose between a Monolith and Microservices for our project?

- What will you test first, and how will you measure early-release success?

- What’s the migration or integration plan with our existing systems?

- How do you manage scope change without derailing the project?

- Give an example of a past failure and how it was handled (institutional learning, not excuses).

Red flags

- Overly optimistic timelines promised before any technical discovery.

- Agreeing to “everything” without clarifying priorities or risks.

- Ambiguous ownership of code/data/infrastructure.

- No testing/security plans, or refusal to provide transparent reports.

Contract models — which fits your stage?

There’s no single “perfect” model; choose what balances risk and flexibility.

Fixed-Price

- When it fits: limited, well-defined scope; small MVP; standalone tasks.

- Advantages: financial certainty; commitment to an agreed scope.

- Challenges: scope changes are costly; risk of “minimum viable delivery” if margins shrink.

Time & Materials

- When it fits: exploratory projects, product-market fit research, changing scope.

- Advantages: high flexibility; you pay for the work actually delivered.

- Challenges: needs strong governance, budget caps, and productivity indicators.

Dedicated Team / Retainer

- When it fits: continuous growth, long roadmap, need for rapid releases.

- Advantages: accumulating domain knowledge; higher efficiency over time.

- Challenges: requires internal product leadership and clear priority management.

Outcome-Based

- When it fits: clearly measurable goals (response time, conversion rate, availability).

- Advantages: strong incentive alignment.

- Challenges: outcomes must be defined precisely, and external factors that affect them must be accounted for.

Contract clauses you shouldn’t compromise on

Ownership and rights

- Ownership of code, documentation, and assets transfers to you upon payment.

- Prohibit the reuse of sensitive proprietary code with other parties when it’s your private IP.

Security and privacy

- NDA clauses, secrets management, encryption, and dependency reviews.

- Compliance requirements (privacy policies, user data rights).

Quality and service (SLA)

- Clear indicators: response time, availability, and incident fix times by severity.

- Post-launch bug-fix warranty with defined duration and scope.

Change and dispute mechanisms

- Formal change-request process (impact, estimate, approval).

- Escalation ladder for disputes (Project Manager → leadership → arbitration if needed).

Project management with a software company — the day-to-day that makes the difference

Clear roles and responsibilities

- Your Product Owner to set priorities and accept deliveries.

- Vendor Project Manager/Scrum Master to run cadence and manage risks.

- Solutions Architect/Tech Lead to ensure cohesive architecture and technical decisions.

Tools and work rhythm

- Shared Kanban/Scrum boards, task tracking, and accurate ticket closure.

- Short weekly meetings, sprint reviews, and an evergreen roadmap.

- Weekly risk report (tech/time/cost) with clear containment actions.

Scope control without disruption

- Triage priorities: Must/Should/Could.

- Small change budget per sprint to capture opportunities without blowing the plan.

- Decision logs: why features were added/removed and their impact on schedule/budget.

Smart pricing — pay for what creates real value

How should you evaluate proposals?

- Compare outputs/outcomes, not hours. Look for risk-reduction paths.

- Request an MVP plan with mandatory and phased items, plus detailed risk estimates.

- Watch for unhealthy pricing signals: big early discounts or vague invoices.

Where to save and where to invest?

- Save on early cosmetics and temporary manual processes.

- Invest in clean architecture, security, testing, and observability—these reduce cumulative cost.

After launch — support, improvement, and knowledge transfer

Post-launch support

- On-call schedule, alert channels, and fix priorities by severity.

- Monthly improvement plan based on actual usage data.

Knowledge transfer and autonomy

- Runbooks, architecture maps, deployment guides, training sessions for your team, and a tapered transition.

- Plans to reduce reliance on specific individuals (i.e., to raise the bus factor).

30–60–90 Plan, Practical Success Metrics, and a Pre-Launch Checklist

30–60–90-day implementation plan with a software company

Days 1–30 — discovery, alignment, and laying the foundation

- Business discovery workshop: define clear, measurable goals (conversions, response time, support tickets) and initial KPIs.

- Turn the vision into an MVP scope: core user stories, Must/Should/Could priorities, and a 12-week roadmap.

- Informed tech choices: define the architecture (often a Modular Monolith at the start), database, essential integrations, and monitoring tools.

- Operational setup: code repositories, coding standards, initial CI for build/tests, and environments (Dev/Staging).

- Interactive prototypes: user flows, interface mockups, and quick usability tests with 3–5 potential customers.

- Early security plan: secrets management, access policies, transport encryption, and an initial risk matrix.

- Expected outcome: agreed MVP scope, initial design, ready environments, and a prioritized backlog—a solid base to start development.

Days 31–60 — MVP development, quality hardening, and content readiness

- Agile development with short releases: weekly sprints, sprint reviews, and incremental learning from metrics.

- Testing pyramid: Unit/Integration/E2E with critical scenarios, and initial performance testing.

- UI/UX: refine task flows, reduce steps to conversion, clear errors, and provide guidance.

- Performance setup: WebP images, front/back-end caching, DB indexes, slow-query tracing.

- Core integrations: payments/billing, email & marketing, analytics instrumentation (Events/Goals), and initial dashboards.

- Security readiness: dependency scans, WAF rules, rate limiting for sensitive endpoints.

- Expected outcome: an internally testable Alpha, basic test coverage, initial stable performance, and conversion event tracking.

Days 61–90 — public beta, performance polish, and controlled launch

- Limited Beta: early user cohort, feedback channels, and bug/idea board.

- Performance & security improvements: deeper compression, reducing critical JavaScript, light security audit (SAST/SCA), and stress tests.

- Operational readiness: rollback plan, tested backups, alerts/monitoring (latency/error rate/resource usage).

- Documentation & knowledge transfer: runbooks, architecture, deployment guide, and training for your team.

- Launch: blue/green or canary strategy, close monitoring of conversion/error metrics during the first 72 hours.

- Expected outcome: stable launch, active monitoring metrics, and a two-month post-launch improvement plan.

How do you measure success? A practical dashboard

Development speed and quality (DORA-like metrics)

- Lead time from commit to production.

- Deployment frequency (preferably at least weekly in early stages).

- Change failure rate.

- MTTR (mean time to recovery).
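These four metrics can be computed from a plain deployment log. The sketch below assumes a hypothetical record per production deployment (commit time, deploy time, whether it failed, and when service was restored) and reports median lead time, deployments per week, change failure rate, and mean time to recovery. Measuring recovery from the deploy time is a simplifying assumption, since incidents are often detected later.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import List, Optional


@dataclass
class Deployment:
    commit_time: datetime                     # when the change was committed
    deploy_time: datetime                     # when it reached production
    failed: bool = False                      # caused an incident, hotfix, or rollback
    restored_time: Optional[datetime] = None  # when service was restored, if it failed


def dora_summary(deploys: List[Deployment], period_days: int) -> dict:
    if not deploys:
        raise ValueError("need at least one deployment")
    lead_hours = [(d.deploy_time - d.commit_time).total_seconds() / 3600 for d in deploys]
    failures = [d for d in deploys if d.failed]
    recovery_hours = [
        (d.restored_time - d.deploy_time).total_seconds() / 3600
        for d in failures
        if d.restored_time is not None
    ]
    return {
        "median_lead_time_hours": median(lead_hours),
        "deploys_per_week": len(deploys) / (period_days / 7),
        "change_failure_rate": len(failures) / len(deploys),
        # Simplification: recovery is measured from deploy time, not from detection.
        "mttr_hours": sum(recovery_hours) / len(recovery_hours) if recovery_hours else None,
    }


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 9, 0)
    h, day = timedelta(hours=1), timedelta(days=1)
    log = [
        Deployment(t0, t0 + 2 * h),
        Deployment(t0 + day, t0 + day + 4 * h, failed=True, restored_time=t0 + day + 5 * h),
        Deployment(t0 + 2 * day, t0 + 2 * day + 6 * h),
        Deployment(t0 + 3 * day, t0 + 3 * day + 8 * h, failed=True,
                   restored_time=t0 + 3 * day + 11 * h),
    ]
    print(dora_summary(log, period_days=14))
    # {'median_lead_time_hours': 5.0, 'deploys_per_week': 2.0,
    #  'change_failure_rate': 0.5, 'mttr_hours': 2.0}
```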

Business value

- Conversion rate (sign-up/purchase/booking).

- CAC (customer acquisition cost) — should decrease as journeys improve.

- Retention/Churn for subscriptions or returning users.

- ARPU/LTV depending on your model (SaaS/Commerce).

User experience and performance

- Core Web Vitals: LCP/CLS/INP within recommended thresholds.

- Crash-free sessions and front-end error rates.

- NPS/CSAT via short in-product pulse surveys.
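A small sketch of rating field data against the Core Web Vitals thresholds published by Google (LCP good at 2.5 s or less, poor above 4 s; INP good at 200 ms or less, poor above 500 ms; CLS good at 0.1 or less, poor above 0.25), evaluated at the 75th percentile of page loads. The helper names are hypothetical, and using the nearest-rank percentile is an assumption; analytics tools may interpolate differently.

```python
import math
from typing import Dict, List

# (upper bound for "good", upper bound for "needs improvement"); above that is "poor".
THRESHOLDS = {
    "LCP": (2500.0, 4000.0),  # Largest Contentful Paint, milliseconds
    "INP": (200.0, 500.0),    # Interaction to Next Paint, milliseconds
    "CLS": (0.1, 0.25),       # Cumulative Layout Shift, unitless score
}


def p75(samples: List[float]) -> float:
    """75th percentile by the nearest-rank method."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(0.75 * len(ordered)))
    return ordered[rank - 1]


def rate_vitals(field_data: Dict[str, List[float]]) -> Dict[str, str]:
    ratings = {}
    for metric, samples in field_data.items():
        good, needs_improvement = THRESHOLDS[metric]
        value = p75(samples)
        if value <= good:
            ratings[metric] = "good"
        elif value <= needs_improvement:
            ratings[metric] = "needs improvement"
        else:
            ratings[metric] = "poor"
    return ratings


if __name__ == "__main__":
    field = {
        "LCP": [1800, 2100, 2400, 3900],
        "INP": [120, 180, 260, 640],
        "CLS": [0.02, 0.05, 0.3, 0.4],
    }
    print(rate_vitals(field))
    # {'LCP': 'good', 'INP': 'needs improvement', 'CLS': 'poor'}
```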

Golden rule: make the dashboard visible to all parties (weekly), and set priorities based on data, not impressions.

Pre-launch checklist

Product & functionality

- Core user stories completed and E2E-tested.

- Conversion paths shortened and instrumented (Events/Goals).

- Error/maintenance pages and search/filter functions work on mobile and desktop.

Performance & tech

- Compressed images (WebP/AVIF), lazy loading, and appropriate caching.

- DB indexes and slow-query monitoring enabled.

- Light stress tests for peak scenarios.

- Tried-and-tested rollback path.
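Checking that an index is actually used takes only a minute. This sketch uses Python's built-in `sqlite3` module and SQLite's `EXPLAIN QUERY PLAN` to compare the plan for a typical lookup before and after adding a composite index; the `orders` table and index name are hypothetical, and PostgreSQL/MySQL offer the equivalent `EXPLAIN` with their own output formats.

```python
import sqlite3


def query_plan(conn: sqlite3.Connection, sql: str, params: tuple = ()) -> list:
    # Each plan row is (id, parent, notused, detail); 'detail' is the readable step.
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, created_at TEXT, total REAL)"
)

sql = "SELECT id, total FROM orders WHERE customer_id = ? AND created_at >= ?"
before = query_plan(conn, sql, (42, "2024-01-01"))

# Composite index: equality column first, range column second.
conn.execute("CREATE INDEX idx_orders_customer_created ON orders (customer_id, created_at)")
after = query_plan(conn, sql, (42, "2024-01-01"))

print(before)  # a full-table SCAN of orders
print(after)   # a SEARCH that names idx_orders_customer_created
```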

Security & compliance

- Modern TLS, secrets management, and least privilege.

- WAF and rate limiting for APIs.

- Updated privacy policy and user data-management UI where needed.

- Third-party dependency scans and critical updates applied.

Analytics & tracking

- GA4 (or alternative) configured with custom events.

- Defined conversion goals, UTM campaign tags, and ad integrations if needed.

- Executive KPI dashboard.

Operations & support

- Runbook, architecture map, documented deployment steps.

- On-call schedule, severity levels, and internal SLA.

- User feedback channel and weekly improvement plan for the first two months.

When should you consider expanding collaboration with a software company?

Signals to scale up

- Backlog congestion and lost value due to limited capacity.

- User growth exceeding current performance thresholds (heavy reads, complex reports).

- The need for new integrations/additional channels (mobile apps, kiosks, partner integrations).

How to scale without waste?

- Extract one high-load service (Payments/Reporting) into an independent service with cost-impact measurement.

- Adopt feature flags for gradual rollouts.

- Quarterly architecture and cost reviews with the vendor to keep the course aligned.
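Gradual rollouts with feature flags usually rely on deterministic bucketing, so a user keeps seeing the same variant across sessions. A minimal sketch, assuming flags are evaluated in application code rather than by a dedicated flag service: hash the flag name and user ID into a bucket from 0 to 99 and enable the feature when the bucket falls below the rollout percentage. The function name and the `new-checkout` flag are hypothetical.

```python
import hashlib


def in_rollout(flag_name: str, user_id: str, percent: int) -> bool:
    """Deterministically place a user in bucket 0-99 for this flag."""
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    return bucket < percent


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(10_000)]
    enabled = sum(in_rollout("new-checkout", u, 20) for u in users)
    print(f"{enabled / len(users):.1%} of users see new-checkout")  # close to 20%
```

Because a user's bucket never changes, raising the percentage from 10 to 50 only adds users; nobody who already had the feature loses it, and including the flag name in the hash keeps different flags from always targeting the same users.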

Quick common questions (Concise FAQ)

Start big or small?

Start with a focused MVP that validates core hypotheses, then expand based on usage data—not expectations.

How do I ensure the software company doesn’t “lock me in” technically?

Require ownership of code and documentation, clear coding standards, reproducible deployments for your team, and training before handover.

What if scope changes during execution?

Use a formal change mechanism with impact/estimate/approval, and keep a small change budget per sprint to capture opportunities without disruption.

Conclusion: A partnership that builds a product to last

Choosing a successful software company isn’t a one-off transaction, but a partnership that turns vision into measurable reality. With a 30–60–90 day plan, a transparent dashboard, and clean architecture, every line of code becomes an investment in speed to market, experience quality, and scalability. Don’t look for the cheapest; look for the team that increases your odds of success with lower risk and higher long-term ROI.